Enikő Márton: The Evolution of Design Techniques

The objects in our artificial environment are created by design and implementation. In other words, they are the result of a top-down process of material organisation. A new structure is introduced into an existing fabric and must adjust to the global laws of the system already present. It must fit into its local environment as well as conform to the elements that make up its own structure. While the building units of such an object have traditionally been organised in space by means of drawings, today this process of materialisation is increasingly regulated by digital information,1 which makes possible the emergence of objects built on far more complex systems of rules.

Changes in our society, and in our cultural predispositions and receptivity, are closely linked to the achievements and dominant views of science, which in turn inevitably influence architectural innovations and trends. This was also the case with the appearance of computers and computer science. The emergence of digital technologies marks the end of (1) normative architectural design.2 The initial use of computers as tools of representation and documentation led designers to a process-oriented, (2) generative design practice, and then to (3) design techniques that model adaptive, dynamic systems.

Although scientific progress inspires and aids designers on both a material (geometrical and physical) and a theoretical (conceptual) level, the majority of the envisaged objects exist only in virtual space or as table-top models, and the theory behind the new design techniques is rather unacademic. Computer-aided design, however, gives architecture not only a new tool but also an opportunity to rethink itself in a new context.

(1) Design process with full knowledge of the whole

In a traditional scenario, an idea or a final solution to the design problem surfaces in the designer’s mind during the design phase; however multi-faceted and broad, this idea is also limited. The information produced about it then serves to describe the physical and spatial appearance of the object or, to use a biological analogy, its phenotype. This type of material organisation is an exclusively top-down process, at the end of which a physically stable, passive condition and a specific entity come into being. In this organisational model, the relationship of the parts (building units) and the development of details can only be determined with full knowledge of the whole. First, a concept of the building as a whole is formulated in the designer’s mind, and then the interrelationships and spatial positions of the components unfold during the design process. This is what we refer to as a normative design process in current architectural practice.

For representation at the physical level, designers have axiom-based Euclidean geometry at their disposal for the preparation of the graphic information – plans, sections and elevations – required for project implementation. The applied building units, the technologies and the geometry required for delineation all result in forms consisting of planar elements.

On a theoretical level, the process of normative design most closely resembles classical Newtonian science. Given the initial conditions, the outcome of events can be calculated by applying the given rules; chance has no role in such a system. The world works like a kind of cosmic clockwork. This mechanistic worldview allows not only for the prediction of the future (the materialisation of the object to be designed) but also for the precise unravelling of past events (the function to be fulfilled). This is a mechanism of necessity, which produces the appropriate solution for a given demand.

(2) Whole generated by dynamic processes

Experiments and theories of thermodynamics in the early nineteenth century did much to undermine this mechanistic perspective. Then, at the beginning of the twentieth century, Einstein helped us see that this cosmic mechanism was not at all what we had imagined: what it was really like depended on where one stood within it. It still worked deterministically (like clockwork), but the theory of necessity gave way to the theory of a mechanism of possibilities, which did not yield a single outcome but continuously generated new results.

With the help of computers and digital codes, machines performing increasingly complex operations can be modelled. Although the processes that take place inside them are still deterministic, calculating their temporally changing results without a computer would take a considerable length of time, or would be impossible. A design practice that, instead of supplying a single solution to a problem, produces a method or procedure capable of generating an infinite number of solutions runs counter to the traditional approach. These methods, whether the designer uses an analogue or a digital technique, are rule-based processes that create temporally changing, complex structures.

Structures created by manual means

Just as the use of computers in design will not necessarily yield generative processes, generative design processes do not require computers. If we wish to design a planar pattern by colouring certain squares on a gridded white sheet of paper, we can either rely on our sense of aesthetics when choosing which square to colour, or set up rules for the process of pattern formation. The simplest rule would require each white field to be followed by a black one, which produces a chessboard. Alternatively, we can go as far as multifactorial mathematical algorithms that generate patterns considerably more complicated than a chessboard.3
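Both rule-based options can be sketched in a few lines of Python. This is a minimal illustration, not the author’s method: the grid is assumed to be a list of rows holding 0 (white) or 1 (black), and the function names `chessboard` and `life_step` are hypothetical. The first applies the simplest alternation rule; the second computes one generation of Conway’s Game of Life (see note 3), in which each field’s next colour depends on its eight neighbours, with fields beyond the edge of the sheet treated as white.

```python
def chessboard(rows: int, cols: int) -> list[list[int]]:
    """Simplest rule: every field has the opposite colour of its left and upper neighbour."""
    return [[(r + c) % 2 for c in range(cols)] for r in range(rows)]


def life_step(grid: list[list[int]]) -> list[list[int]]:
    """One Game of Life generation on a bounded sheet (outside fields count as white)."""
    rows, cols = len(grid), len(grid[0])

    def black_neighbours(r: int, c: int) -> int:
        # Count black fields among the eight surrounding squares.
        return sum(
            grid[r + dr][c + dc]
            for dr in (-1, 0, 1)
            for dc in (-1, 0, 1)
            if (dr or dc) and 0 <= r + dr < rows and 0 <= c + dc < cols
        )

    new_grid = []
    for r in range(rows):
        new_row = []
        for c in range(cols):
            n = black_neighbours(r, c)
            black = grid[r][c] == 1
            # A black field survives with 2 or 3 black neighbours; a white one turns black with exactly 3.
            new_row.append(1 if (black and n in (2, 3)) or (not black and n == 3) else 0)
        new_grid.append(new_row)
    return new_grid
```

Applied twice to a short horizontal bar of three black fields (the so-called blinker), `life_step` returns the original bar: a small example of a configuration that the rules keep regenerating.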

Planar elements can be replaced by spatial units, such as a plastic cup or even a folded paper shape, which create three-dimensional structures according to a sequence of rules we have invented. The photographs, taken at the FAB-RIC workshop organised for students of MOME,4 show examples of this. As a first step towards creating physical algorithm-based systems, the students manipulated the shape of plastic cups by cutting, folding and twisting them. Using metal staples, they then created first simple patterns and later complex three-dimensional shapes. The resulting structures are shaped not only by the rules for sequencing and linking individual components, but also by the laws of physics and the properties of the building units’ material.

Figure 1. Patterns and forms of transformed plastic cups generated by students of FAB-RIC Workshop, MOME, Budapest, 2007.

The other option is to treat the gridded paper as a surface rather than as a multiplicity of individual fields. Here the designed algorithm regulates the displacement of individual points of the surface in space. Origami, the design of form by folding paper, is built on this model. The sequence and shape of the folds (in other words, the relationship between surface points) can be defined by rules.5
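For a flat fold, the rule that relates surface points can be written down directly: folding along a straight crease maps every point on one side to its mirror image across the crease. The sketch below assumes an idealised, zero-thickness sheet whose points are (x, y) pairs, and it ignores layer order and partial, three-dimensional folds; `fold` and `fold_sequence` are hypothetical names, and this is not how TreeMaker (note 5) works.

```python
Point = tuple[float, float]


def fold(points: list[Point], a: float, b: float, c: float) -> list[Point]:
    """Flat-fold the sheet along the crease a*x + b*y + c = 0.

    Points with a*x + b*y + c > 0 are mirrored onto the other side;
    points on or behind the crease stay where they are.
    """
    norm2 = a * a + b * b
    folded = []
    for x, y in points:
        d = a * x + b * y + c
        if d > 0:
            # Reflect the point across the crease line.
            x, y = x - 2 * a * d / norm2, y - 2 * b * d / norm2
        folded.append((x, y))
    return folded


def fold_sequence(points: list[Point], creases: list[tuple[float, float, float]]) -> list[Point]:
    """Apply folds in order; the order of the creases is itself part of the rule."""
    for a, b, c in creases:
        points = fold(points, a, b, c)
    return points
```

Folding the point (3, 2) first along the crease x = 1 and then along y = 0 sends it to (-1, 2) and finally to (-1, -2); swapping the order of two non-perpendicular creases would generally give a different result.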

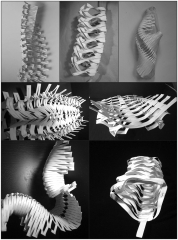

While origami operates through folding, we can also apply other manual algorithms by treating the surface as a continuous substance. We can initiate transformations by making cuts and folding the cut parts, by connecting distant surface points, or by a combination of these actions. Here too the global shape is formed by applying local rules. The significant difference, however, is that in the former case we elaborated individual elements into coherent systems, whereas in the latter we altered the relationships between the elements (points) of a coherent system. The photographs illustrate this with form studies made from single, continuous paper surfaces.6

Figure 2. Cut, folded and stapled forms created from a single sheet of paper. Models by Enikő Márton.

Structures generated by digital means

Descriptions of hyperbolic geometry, linked to names such as János Bolyai, Nikolai Ivanovich Lobachevsky and Carl Friedrich Gauss, had already appeared at the beginning of the nineteenth century as a first step towards the calculability of non-Euclidean geometries and topological surfaces. Their precise description and representation in design, however, was not possible before the emergence of three-dimensional computer modelling. Digital modelling software not only simplified the use of curved surfaces in architectural design, but also made it possible to transform modelled forms without interrupting the continuity of the material.

Taking advantage of the time-dependent manipulability of flexible surfaces, which can be modelled with animation software while retaining their topological, spatial continuity, the architect Greg Lynn experiments with transforming various shapes in virtual space or, in his words, animates forms.7 The surface points of topological shapes can be calculated using vectors, which makes it possible to shift a single surface point in space; doing so, however, also displaces the adjacent points, while the continuity of the surface is retained. These transformations generate new shapes by applying the rules (the series of steps) established by the designer to answer the specific design problem.
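Lynn’s animation software is not reproduced here, but the principle that moving one point drags its neighbours along can be illustrated with a minimal sketch. It assumes the surface is a grid of (x, y, z) control points and that the influence of a displacement fades with a Gaussian falloff over grid distance; the functions `displace` and `flat_grid` and the `radius` parameter are hypothetical choices. Because the weights vary smoothly, adjacent points move by similar amounts and the surface stays continuous.

```python
import math

Point3 = tuple[float, float, float]


def displace(surface: list[list[Point3]], i0: int, j0: int,
             vector: Point3, radius: float) -> list[list[Point3]]:
    """Move control point (i0, j0) by `vector`; the other points follow
    with a Gaussian falloff, so no tear opens in the surface."""
    dx, dy, dz = vector
    result = []
    for i, row in enumerate(surface):
        new_row = []
        for j, (x, y, z) in enumerate(row):
            dist2 = (i - i0) ** 2 + (j - j0) ** 2
            # Weight is 1 at the picked point and fades smoothly with grid distance.
            w = math.exp(-dist2 / (2 * radius ** 2))
            new_row.append((x + w * dx, y + w * dy, z + w * dz))
        result.append(new_row)
    return result


def flat_grid(n: int, spacing: float = 1.0) -> list[list[Point3]]:
    """A flat n-by-n sheet of control points in the plane z = 0."""
    return [[(i * spacing, j * spacing, 0.0) for j in range(n)] for i in range(n)]
```

Lifting the centre of a 5-by-5 sheet by one unit raises that point fully, its direct neighbours by about 0.61 and the corners by under 0.02, so a single-point rule produces a smooth bump rather than a spike.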

Macro-level techniques relating to the whole shape and micro-level procedures transforming the shape’s constituent units can both be employed. In the former case the transformation alters the parameters of individual surface points; in the latter it changes the specifications of the structural components. If, rather than altering the form itself (as with animation software, for example), we generate new form by altering the elements that comprise it, we are talking about parametric design.

Because parametric design relies on geometries that can be defined in terms of algorithms, it requires parametric software. Branko Kolarevic cites the international railway terminal at London’s Waterloo Station, designed by Nicholas Grimshaw and Partners, as an example of parametric design.8 The terminal is roofed by 36 three-pin bowstring arches that all differ in dimensions and parameters but share the same form. The angles of the hinge points and arches change slightly from one element to the next, and the whole is clad with a continuous surface, producing a complex, multi-arched form.
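The logic of one definition with many instances can be sketched as a parametric family. The dimensions below are invented for illustration and are not the terminal’s real values: the span is assumed to grow linearly from 35 m to 50 m across the 36 arches, the rise-to-span ratio to creep from 0.12 to 0.14, and each arch is idealised as a symmetric parabola through its two supports and a crown hinge. The class `Arch` and the function `arch_family` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Arch:
    index: int
    span: float  # distance between the two support hinges (m)
    rise: float  # height of the crown hinge above the supports (m)

    def height_at(self, x: float) -> float:
        """Parabolic profile through both supports and the crown hinge."""
        return 4 * self.rise * x * (self.span - x) / self.span ** 2

    def support_angle_deg(self) -> float:
        """Angle of the arch at a support; the parabola's slope there is 4 * rise / span."""
        return math.degrees(math.atan(4 * self.rise / self.span))


def arch_family(count: int = 36, span_min: float = 35.0, span_max: float = 50.0,
                ratio_min: float = 0.12, ratio_max: float = 0.14) -> list[Arch]:
    """Generate `count` arches of the same form whose parameters vary step by step."""
    arches = []
    for i in range(count):
        t = i / (count - 1)  # 0 for the first arch, 1 for the last
        span = span_min + t * (span_max - span_min)
        ratio = ratio_min + t * (ratio_max - ratio_min)
        arches.append(Arch(i, span, ratio * span))
    return arches
```

Changing one input, such as `span_max`, regenerates all 36 arches consistently, which is the practical point of working parametrically rather than drawing each arch separately.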

As my examples demonstrate, regardless of whether digital or manual techniques are used, and whether the whole structure or only its units are manipulated, the subject of the transformations, the material, remains a passive building element. Designers work with form-seeking and form-generating processes whose rules fit the design problem. Beyond good solutions to the initially stated problem, these processes also give rise to more forward-looking designs that carry new possibilities. What happens, however, when the elements that make up the structure play an active part in the transformation?

(3) Whole generated by active parts

When designed, form-generating transformations spatially order active elements that have their own properties, we are dealing with unpredictable, temporally irreversible, bottom-up processes. The information about the planned object that emerges during the design process no longer describes its external, physical appearance (phenotype). Instead, designers use genetic algorithms to design internal qualities and logics of operation: the genotype, to use another biological term. The aim is no longer to create a static entity, but to bring to life a working, reacting identity. Like an open system, this organisation reacts dynamically to external influences and continuously adapts to its environment: it is responsive.
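A genetic algorithm in its most basic form can be sketched as follows. The genotype is assumed to be a list of numbers (for instance, heights or angles of building units), and the hypothetical `fitness` function, which here simply rewards closeness to an invented `TARGET` profile, stands in for whatever performance criteria a real project would evaluate. Selection by small tournaments, one-point crossover, Gaussian mutation and elitism (the best genotype always survives) are common textbook choices, not necessarily those used in the projects discussed here; `evolve` is a hypothetical name.

```python
import random

# Invented target profile, used only to give the fitness function something to measure.
TARGET = [0.2, 0.5, 0.9, 0.5, 0.2]


def fitness(genotype: list[float]) -> float:
    """Higher is better: negative squared distance from the target profile."""
    return -sum((g - t) ** 2 for g, t in zip(genotype, TARGET))


def evolve(fitness, genome_length: int, generations: int = 100, pop_size: int = 30,
           mutation_rate: float = 0.1, seed: int = 0):
    """Evolve genotypes; return the best one and the best fitness of each generation."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    pop = [[rng.uniform(0.0, 1.0) for _ in range(genome_length)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        ranked = sorted(pop, key=fitness, reverse=True)
        history.append(fitness(ranked[0]))
        next_pop = [ranked[0]]  # elitism: the best genotype passes on unchanged
        while len(next_pop) < pop_size:
            # Tournament selection: the fittest of three random individuals becomes a parent.
            mother = max(rng.sample(ranked, 3), key=fitness)
            father = max(rng.sample(ranked, 3), key=fitness)
            cut = rng.randrange(1, genome_length)  # one-point crossover
            child = mother[:cut] + father[cut:]
            # Gaussian mutation applied gene by gene with probability mutation_rate.
            child = [g + rng.gauss(0.0, 0.05) if rng.random() < mutation_rate else g
                     for g in child]
            next_pop.append(child)
        pop = next_pop
    best = max(pop, key=fitness)
    return best, history
```

Calling `evolve(fitness, len(TARGET))` returns the best genotype found together with a per-generation record; because of elitism, the best fitness in that record never decreases.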

Corpora is an example of such a responsive organisation composed of active building units. The behaviour of its component parts determines the form of the whole object in space. Temporal changes in the shape of Corpora are manifestations of the behaviour of the individual elements in relation to each other on the one hand, and of the reaction of the whole organisation on the other. The design process begins with the parts and their relationships to one another, and then, in accordance with rules, the parts generate the whole object. Both the generating process and its manifestations (in other words, the formation of the object) are grounded in rules and operate through algorithms.9

The use of genetic algorithms in design takes us to the stage preceding materialisation. If in the future we are able to assign intensive qualities10 (such as the distribution of forces and material quality) to computer models, we will obtain buildable structures that adapt to the laws of statics and physics. Let us imagine exchanging the building units of Corpora for organic elements. With advances in nanotechnology and computer coding, it may become possible to realise not only William Gibson’s vision11 (the almost instantaneous rebuilding of Tokyo with the help of nanotechnology after an earthquake) but also responsive, spatially manifest transformations such as Corpora.

This vision of the future is based on the modelling of currently known biological systems. The transformation of design can take us considerably further than the application of the principles of real, natural, biological processes. Just as virtual space creates the possibility both for experiments that simulate reality and for the creation of new realities, a kind of artificial biology can manifest in our physical environment, which, through the use of new, non-natural materials and technologies, can create non-natural organisations.

1. William J. Mitchell, in his essay “Constructing an Authentic Architecture of the Digital Era”, in Disappearing Architecture: From Real to Virtual to Quantum, edited by Georg Flachbart and Peter Weibel (Birkhäuser, Basel, 2005), details how digital design, digital documentation, digitally controlled manufacturing processes, and digital positioning and assembly devices are making architectural systems increasingly complex in structure. A higher level of complexity results in adaptations that are not only more expressive, but also more flexible and more sensitive to the location and the demands of the architectural programme.

2. Normative architecture can be defined as traditional architectural practice which satisfies functional and client demands and is based on the conventional sequence of commission, design, permits and construction.

3. The “Game of Life”, a digital cellular automaton devised in 1970 by the British mathematician John Horton Conway, is a computer model that generates patterns of black and white fields on a grid. Its operation depends on the starting conditions: the initial pattern is the only input, from which the program generates ever newer configurations. What we get is a potentially endless process that continuously changes over time, and whose momentum can be “exhausted” in the course of pattern development. Berlekamp, E.R., Conway, J.H., Guy, R.K.: Winning Ways for your Mathematical Plays (A K Peters Ltd., Natick, 2004).

4. FAB-RIC Workshop, Moholy-Nagy University of Art and Design, Budapest (MOME), October 2007: the first part of a workshop series organised by the author for architects and designers.

5. Robert J. Lang developed a program called TreeMaker, which is capable of generating origami patterns by applying mathematical rules. Lang, R.J.: Origami Design Secrets: Mathematical Methods for an Ancient Art (A K Peters Ltd., Natick, 2003).

6. Chris Perry and Aaron White: Implanting Ambient Interfaces for Collective Invention, graduate design studio, Post-graduate Program in Architecture (Pratt Institute, Brooklyn, NY, 2005). The photographs show the author’s own models.

7. Lynn, G.: Animate Form (Princeton Architectural Press, New York, 1999).

8. Kolarevic, B. (ed.): Architecture in the Digital Age: Design and Manufacturing (Taylor & Francis, New York and London, 2003).

9. The applied rules are effectively descriptions, as the authors of “Tartan Worlds”, a software program based on interactive language systems that generates two-dimensional patterns (or, as they called them, designs), argued as early as the beginning of the 1990s. These rules describe, on the one hand, the conditions that must be present for a rule to be applicable and, on the other, the events that take place as long as those conditions hold. Thus the “design process”, as the authors put it, is but a transformation from a known position or condition to an unknown one. Depending on the algorithms, this transformation can have various levels, sequences and derivations. Woodbury, R.F., Radford, A.D., Talip, P.N., Coppins, S.: Tartan Worlds: A Generative Symbol Grammar System, in Kensek, K.M., Noble, D. (eds.): Computer Supported Design in Architecture (ACADIA, 1992).

10. Manuel DeLanda discusses Gilles Deleuze’s ideas on intensive and extensive qualities. Extensive qualities are spatially divisible, such as length, volume and weight: if I divide a piece of material in half, each half will have half the size and weight. Intensive qualities, however, are indivisible: one cannot divide a liquid at 90°C into two portions at 45°C. According to DeLanda, the tensions and structural forces within architecture are such intensive qualities. DeLanda, M.: Deleuze and the Use of the Genetic Algorithm in Architecture (Architectural Design Vol. 72, John Wiley and Sons Limited, London, 2002).

11. Gibson, W.: Idoru (Berkley Books, New York, 1996).
